I'd like to open this discussion with a picture.

Do you see it? No? Here, let me try again:

How 'bout now? Still don't get it? Okay, I'll explain:

You've probably noticed that you don't need to upgrade your computer very often anymore. Upgrades these days are still cool and all, but they're just a difference in magnitude: slightly better framerates, slightly higher graphics settings, but there's never anything groundbreaking about it. A five year old computer should be able to play any new game released today, and a good three year old computer will still be able to play new games on the highest settings.

Things used to be different. Back in the 1990s and early 2000s you had to upgrade your computer every couple of years - ideally every 18 months - and it was a difference in kind. Back then it was expected that a two year old computer would struggle to play a new game. If you wanted to keep playing new, demanding games, you had to buy new hardware - frequently.

What we're looking at in the above pictures is, I think, a return to that 18 month upgrade cycle. It's not going to happen today. Not all of the pieces are there yet, but they're coming, and they're coming a lot faster than I thought they were. The first round of middleware was shown off at GDC 2018 and will take some time to polish, and then we're maybe two or three AAA release cycles after that before the powder keg explodes.

Save your shekels, son: Doom 5 is going to require real-time hardware raytracing.

1.) CPUs

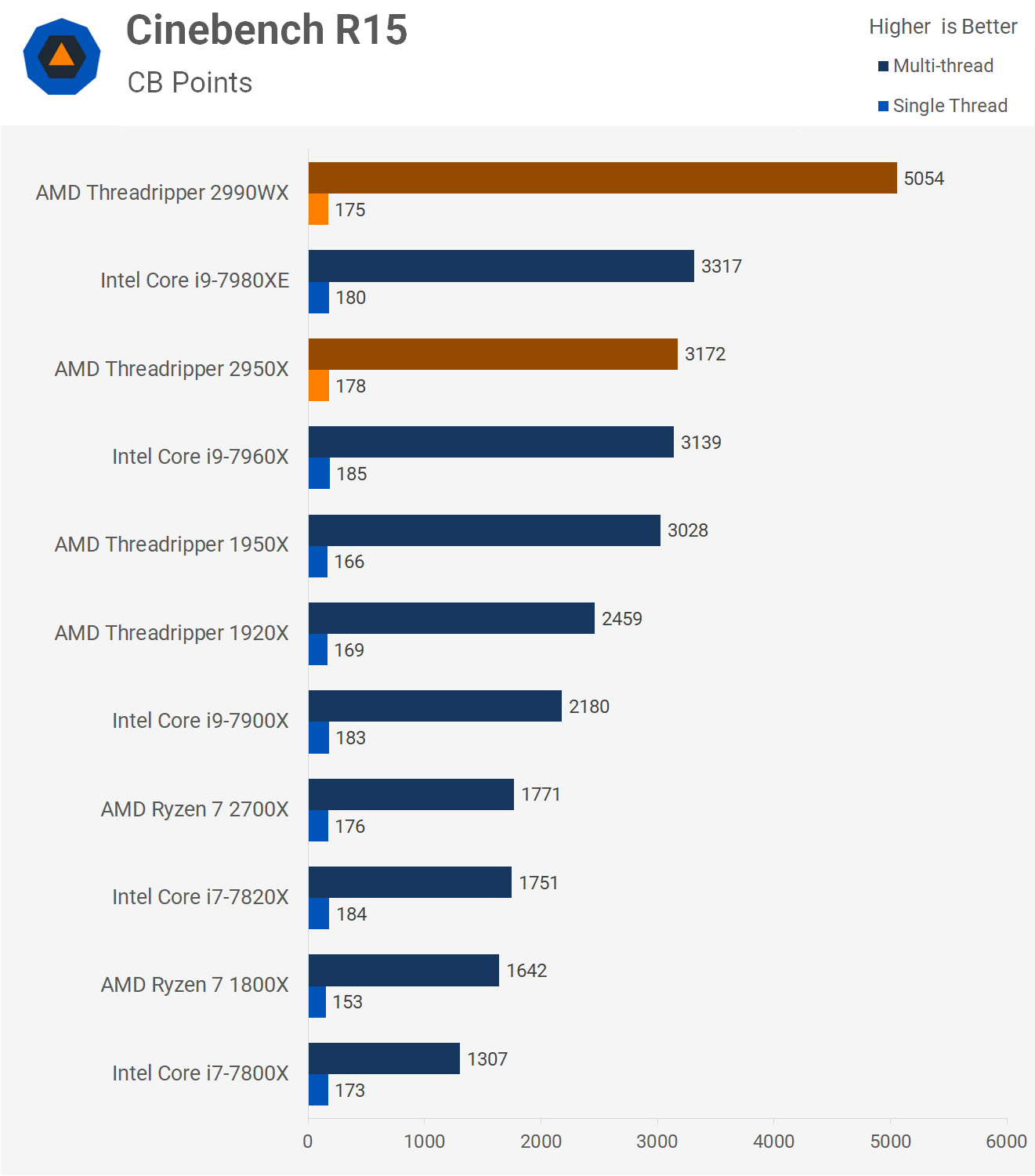

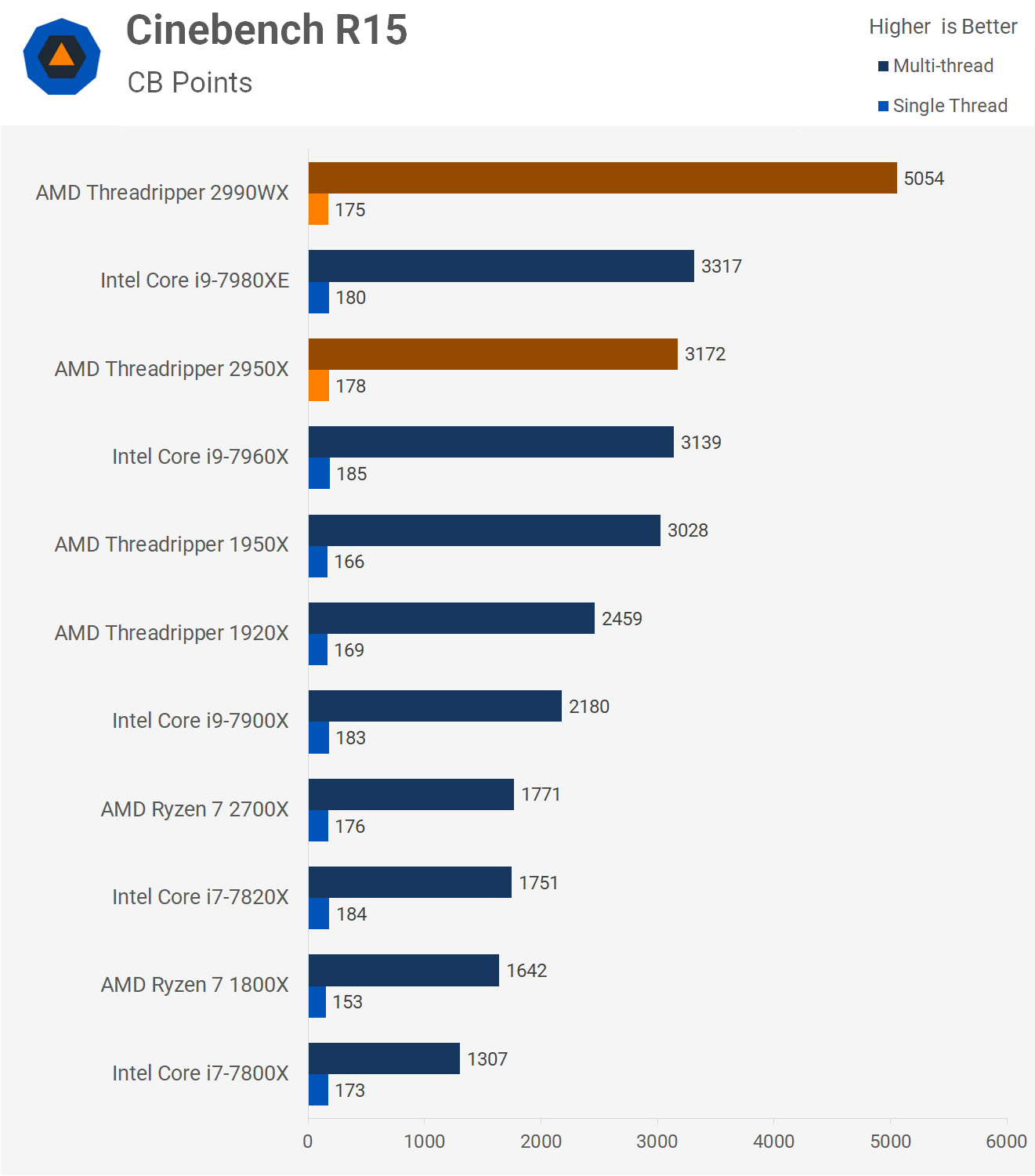

If you aren't familiar with Threadripper: it's an enthusiast grade, high end desktop/gaming CPU with anywhere from 8 to 32 cores, each with 2 hardware threads (16 to 64 logical processors on a single socket). Threadripper chips are usually clocked slower than lower core count CPUs, trading single-core performance for ridiculous parallel instruction throughput.

Threadripper is, without exaggeration, the most interesting thing to happen in the CPU space since Athlon 64. It's one of those crazy gambits you almost never see out of a big company, this wild guess that, if someone makes a CPU offering this tradeoff, someone else will find something to do with it.

For a consumer part, that 'someone' will almost certainly be a game developer. And for a SISD integer part, that 'something' means gameplay (as in, revolutionary changes to it). There are three major areas where modern games are more or less in the stone ages: interactivity, world mutability, and AI. All lend themselves well to CPUs and quite abysmally to GPUs, so my educated guess is that we're going to see revolutionary changes in likely all of these areas. None of that has happened yet, but the more the core wars heat up, the more likely it becomes.

Speaking of core wars, it's not certain when Intel will become a part of this discussion. They come nowhere close to competing against AMD at the top of the HEDT space, and even where they do have performance competitive chips, Intel's parts are several times more expensive (or an embarrassing fraud). Not actually sure what they're thinking here - maybe their HEDT yields are so low that they actually can't be price competitive?

The good news for Intel is that they've actually been working in this space for a long time: Project Larrabee and Xeon Phi. If the throughput/single thread perf tradeoff gets important enough, they'll at least have something they can bring to market... eventually. Much like their current HEDT strategy, though, Intel whiffed the Xeon Phi consumer market to focus on the crazytown overpriced server market. As usual, they'll probably have to take a protracted beat-down before they change course.

2.) GPUs

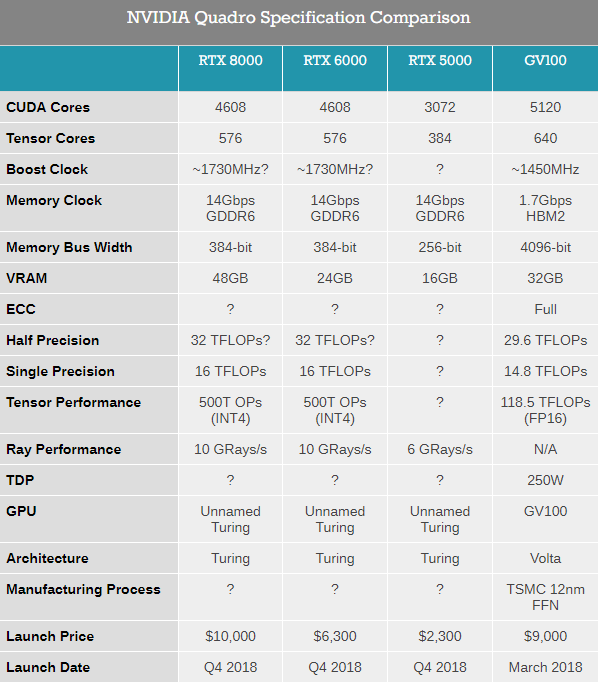

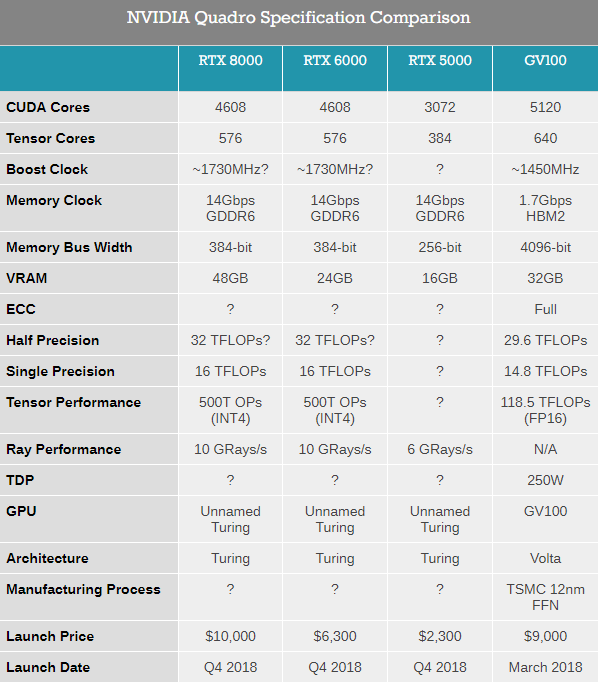

Nvidia announced their next-generation workstation cards at SIGGRAPH, with the Turing-based Quadro RTX 8000 as flagship. It's a safe guess that the next-generation GeForce RTX 2080 will be based on this chip (name not officially announced, but teased heavily during the presentation).

Why should you care?

For starters: Based on the numbers presented, I estimate the RTX 2080 could be as much as twice as fast as the GTX 1080 - which is still an incredibly powerful card, more than two years after it released. That's very cool.

More importantly: like the Quadro RTX, it will have hardware accelerated raytracing - Nvidia Gameworks support confirmed. The Quadro RTX 8000 will be capable of 10 billion hardware rays per second, which works out to 60 FPS at full HD with about 80 samples per pixel. This brings hardware raytracing forward from some fuzzy, noisy thing occasionally useful for occlusion queries, to something that could actually replace raster graphics entirely. (For comparison, the GTX 1080 can cast 100-125 million rays per second.)
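If you want to check that 80 samples figure yourself, here's the arithmetic as a quick Python sketch. It assumes "full HD" means 1920x1080 and treats samples per pixel as nothing more than rays per second divided by pixels drawn per second. The function name is mine, not anything from Nvidia.

```python
def rays_per_pixel(rays_per_second, width, height, fps):
    """Rays available per pixel per frame for a given ray throughput."""
    pixels_per_second = width * height * fps
    return rays_per_second / pixels_per_second

# Assumption: "full HD" is 1920x1080, rendered at 60 FPS.
WIDTH, HEIGHT, FPS = 1920, 1080, 60

# Throughput figures quoted in the text.
quadro_rtx_8000 = rays_per_pixel(10e9, WIDTH, HEIGHT, FPS)
gtx_1080_low = rays_per_pixel(100e6, WIDTH, HEIGHT, FPS)
gtx_1080_high = rays_per_pixel(125e6, WIDTH, HEIGHT, FPS)

print(f"Quadro RTX 8000: {quadro_rtx_8000:.1f} rays per pixel per frame")
print(f"GTX 1080: {gtx_1080_low:.2f} to {gtx_1080_high:.2f} rays per pixel per frame")
```

By the same math the GTX 1080 lands at roughly one ray per pixel per frame, which is why raytracing on that generation stays in "fuzzy, noisy" territory.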

Honestly not sure what else I can say about this. They showed an Unreal Engine 4 real-time raytracing demo at this year's GDC. It involved dozens of servers each with several Quadro cards. Six months later, and we're talking about most of that horsepower on a single card. Holy moly.

3.) VR

Nothing interesting yet. Here's the thing, though. Major movement in VR would fit with my overall thesis:

GPU support for foveated rendering is already there; it just needs some super high res screens and the right eye tracking tech. People are working on both, but neither exists yet. If that eye tracking tech could also report on accommodation then, along with hardware raytracing, you have something that's just as good as a light field display - for a fraction of the cost. And with foveated rendering cutting down the quality of 99% of the display, you're gonna have an absurd ray budget to spend on the fovea.
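To put a rough number on "absurd", here's a back-of-the-envelope sketch. Only the 10 billion rays per second and the 99% figure come from this post. Everything else is an assumption for illustration: a hypothetical 3840x2160 panel at 90 FPS, a fovea covering exactly 1% of the pixels, and a periphery that gets by on one ray per pixel.

```python
def uniform_rays_per_pixel(rays_per_second, width, height, fps):
    """Ray budget per pixel per frame when every pixel is treated equally."""
    return rays_per_second / (width * height * fps)

def foveal_rays_per_pixel(rays_per_second, width, height, fps,
                          fovea_fraction=0.01, periphery_rays_per_pixel=1.0):
    """Ray budget per foveal pixel once the periphery has taken its cheap share."""
    pixels = width * height
    rays_per_frame = rays_per_second / fps
    fovea_pixels = pixels * fovea_fraction
    periphery_pixels = pixels - fovea_pixels
    # Whatever the periphery does not spend is left over for the fovea.
    leftover = rays_per_frame - periphery_pixels * periphery_rays_per_pixel
    return leftover / fovea_pixels

# Hypothetical headset: 3840x2160 at 90 FPS, fed by 10 billion rays per second.
uniform = uniform_rays_per_pixel(10e9, 3840, 2160, 90)
foveal = foveal_rays_per_pixel(10e9, 3840, 2160, 90)

print(f"uniform: about {uniform:.0f} rays per pixel")
print(f"foveated: about {foveal:.0f} rays per foveal pixel")
```

Under those assumptions the fovea gets on the order of a thousand rays per pixel, versus a baker's dozen if you spread the budget evenly.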

The other part is the high core count gameplay revolution. VR games starve for interactivity - static environments are almost painful to be in. Imagine having the CPU budget where you could just, like, pick up a sledgehammer and start tearing down the drywall. Or, some day, having the CPU budget to start talking to an NPC and then they talk back. Crazy, right? Today it is. If the average computer had thousands of CPU cores, maybe not.

And that's the world I see coming for PC hardware. Back in the 1990s and early 2000s, we'd upgrade to get a faster clock speed or more RAM. Then for a teeny tiny little while there in the late aughts, it seemed like we were going to do the same thing for core counts... but that never happened. I think it's finally going to happen. I think we're going to get games that gobble computer power again, CPUs competing on geometrically increasing core counts, and GPUs competing on geometrically increasing rays per second. And every 18 months, you'll have to upgrade because you don't have enough cores or enough rays. (IMO.)
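For a sense of scale, here's what "geometrically increasing" could look like, as a small Python sketch. The big assumption (mine, purely for illustration) is that core counts double once per 18 month cycle. The starting point is today's 32-core Threadripper, and "thousands of cores" is taken to start at 1024.

```python
import math

def doublings_needed(start, target):
    """Number of doublings required to grow from start to at least target."""
    return math.ceil(math.log2(target / start))

def years_to_reach(start, target, months_per_doubling=18):
    """Years until target is reached, assuming one doubling per upgrade cycle."""
    return doublings_needed(start, target) * months_per_doubling / 12

# Assumption: one doubling per 18 month cycle, starting from a 32-core part.
cycles = doublings_needed(32, 1024)
years = years_to_reach(32, 1024)

print(f"{cycles} upgrade cycles, or {years} years, from 32 cores to 1024")
```

If the doubling assumption held, that's five upgrade cycles, or seven and a half years. A slower growth rate stretches it out accordingly.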
